Some of these documents are protected by various copyright laws, and they may be used for personal research use only. Your click on any of the links below constitutes your request for a personal copy of the linked article, and results in our delivery of a personal copy. Any other use is prohibited. You can also request copies of these papers by emailing kcave@umass.edu.

Statistical Summary Representations

The visual system is quite adept at extracting summary information about the basic visual properties of a large number of similar objects grouped together. A series of experiments by Sandarsh Pandey in our lab explores how these summary statistical representations are created and how that process interacts with other visual processes.

Directing Visual Search to a Target Object

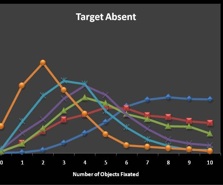

When we are searching for a specific target object, search is guided by a mental representation, or template, of that target object. We want to understand what information is represented in these target templates and how it is used to guide search. Under some conditions, such as when subjects search for two or more colors simultaneously, or search for one color while holding another color in visual working memory, their search guidance is impaired. These results demonstrate that attentional guidance is often not as effective as it might be.

- Chang, J., Stone, L., & Cave, K.R. (2019). Experience with Difficult Dual-Color Search Can Promote a Shift to a Single Range Target Representation. Psychonomic Society 60th annual meeting, Montreal, Quebec, Canada, Nov, 2019.

- Menneer, T., Cave, K.R., Kaplan, E., Stroud, M.J., Chang, J., & Donnelly, N. (2019). The relationship between working memory and the dual-target cost in visual search guidance. Journal of Experimental Psychology: Human Perception and Performance, 45(7), 911-935.

manuscript as accepted

published version (when available) doi:10.1037/xhp0000643

- Stroud, M.J., Menneer, T., Kaplan, E., Cave, K.R., & Donnelly, N. (2019). We can guide search by a set of colors, but are reluctant to do it. Attention, Perception, & Psychophysics, 81, 377-406.

manuscript as accepted

published version doi:10.3758/s13414-018-1617-5

- Cave, K.R., Menneer, T., Nomani, M.S., Stroud, M.J., & Donnelly, N. (2018). Dual target search is neither purely simultaneous nor purely successive. Quarterly Journal of Experimental Psychology.

- Chang, J., Cave, K.R., Menneer, T., Kaplan, E., & Donnelly, N. (2016). How target/distractor discriminability affects search guidance strategy. Psychonomic Society 57th annual meeting, Boston, MA, Nov, 2016.

- Menneer, T., Cave, K.R., Stroud, M.J., Kaplan, E., & Donnelly, N. Modeling search guidance, three parameters for characterizing performance in different types of visual search. Vision Sciences Society 15th annual meeting, St. Pete Beach, FL, May, 2015.

- Stroud, M.J., Menneer, T., Cave, K.R., & Donnelly, N. (2012). Using the dual-target cost to explore the nature of search target representations. Journal of Experimental Psychology: Human Perception and Performance, 38, 113-122.

- Stroud, M.J., Menneer, T., Cave, K.R., Donnelly, N. & Rayner, K. (2011). Search for multiple targets of different colours: Misguided eye movements reveal a reduction of colour selectivity. Applied Cognitive Psychology, 25, 971-982.

Horizontal Benefit and Horizontal Attentional Set

Object-based attention is sometimes demonstrated with a task in which two shapes are compared after they appear within a pair of rectangles similar to those used by Egly, Driver, and Rafal (1994). We have found that performance on these visual comparisons is affected by two different effects, neither of which arises from object-based attention. First, the two shapes are compared more easily if they are arranged horizontally (the Horizontal Benefit). Second, when a cue indicates that the two stimuli will be arranged horizontally, but does not indicate which locations they will occupy, performance is worse if the stimulus configuration is vertical rather than horizontal (the Horizontal Attentional Set). These effects demonstrate unusual aspects of the visual comparison process, and they have implications for the interpretation of results from object-based attention experiments.

- Chen, Z. & Cave, K.R. (2019). When is object-based attention not based on objects? Journal of Experimental Psychology: Human Perception and Performance, 45(8), 1062-1082.

manuscript as accepted

published version (when available) doi:10.1037/xhp0000657

- Chen, Z., Humphries, A., & Cave, K.R. (2019). Location-specific orientation set is independent of the horizontal benefit with or without object boundaries. Vision, 3(2), 30. doi:10.3390/vision3020030

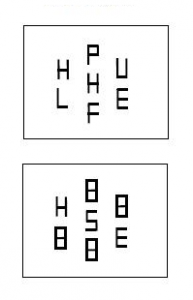

Bias in Comparing Visual Objects

We found evidence for two kinds of bias in tasks that require subjects to view and compare two sequentially presented letter strings. The first is a spatial congruency bias, which has also been demonstrated by Golomb et al. (2014), although this bias had not previously been demonstrated with letter strings: subjects are more likely to report two strings as containing the same letters if both strings appear at the same location. The second type of bias has not previously been demonstrated. We found that subjects are more likely to report two strings as different if they are performing a more analytical comparison that requires comparing individual letters than if they are performing a more holistic comparison in which each string can be processed as a unified whole. Both types of bias provide clues about how visual objects are identified and compared.

- Cave, K.R. & Chen, Z. (2017). Two kinds of bias in visual comparison illustrate the role of location and holistic/analytic processing differences. Attention, Perception, & Psychophysics, 79, 2354–2375.

manuscript as accepted

published version doi:10.3758/s13414-017-1405-7

Interference Among Visual Objects: Perceptual Load and Attentional Zoom

When multiple objects appear together in a scene, they can interfere with one another. Processing a target object becomes more difficult as more nontargets are added to a display. Perceptual Load Theory was an attempt to explain the interactions among targets and nontargets based on the relevance of different objects to the task. Experiments showing interference from irrelevant objects led to an alternative account based on dilution. We present evidence that is not consistent with either theory, and explain the results by assuming that stimuli with more nontargets require a narrowing of attentional zoom.

- Cave, K.R. & Chen, Z. (2016). Identifying visual targets amongst interfering distractors: Sorting out the roles of perceptual load, dilution, and attentional zoom. Attention, Perception, & Psychophysics, 78, 1822.

manuscript as accepted

published version doi:10.3758/s13414-016-1149-9

- Chen, Z., & Cave, K.R. (2016). Zooming in on the Cause of the Perceptual Load Effect in the Go/Nogo Paradigm. Journal of Experimental Psychology: Human Perception and Performance.

- Chen, Z. & Cave, K.R. (2014). Constraints on dilution from a narrow attentional zoom reveal how spatial and color cues direct selection. Vision Research, 101, 125-137.

- Chen, Z. & Cave, K.R. (2013). Perceptual load vs. dilution: the roles of attentional focus, stimulus category and target predictability. Frontiers in Psychology, 4, 327.

Improving Performance in Security Search

We first explored dual-target costs in searches of x-ray images for threats such as guns, knives, and IEDs (improvised explosive devices). Our work suggests that search performance would improve if each screener specialized in searching for specific types of threats.

- Donnelly, N., Muhl-Richardson, A., Godwin, H.J., & Cave, K.R. (2019). Using eye movements to understand how security screeners search for threats in x-ray baggage. Vision, 3(2), 24. doi:10.3390/vision3020024

- Menneer, T., Stroud, M.J., Cave, K.R., Li, X., Godwin, H.J., Liversedge, S.P., & Donnelly, N. (2012). Search for two categories of target produces fewer fixations to target-color items. Journal of Experimental Psychology: Applied, 18, 404-418.

- Menneer, T., Cave, K.R., & Donnelly, N. (2009). The cost of searching for multiple targets: The effects of practice and target similarity. Journal of Experimental Psychology: Applied, 15, 125-139.

- Menneer, T., Barrett, D.J.K., Phillips, L., Donnelly, N., & Cave, K.R. (2007). Costs in searching for two targets: Dividing search across target types could improve airport security screening. Applied Cognitive Psychology, 21, 915-932.

- Menneer, T., Barrett, D.J.K., Phillips, L., Donnelly, N., & Cave, K.R. (2004). Search efficiency for multiple targets. Cognitive Technology, 9, 22-25.

- Menneer, T., Auckland, M., Cave, K.R., & Donnelly, N. Searching for multiple threat items reduces search performance. The Fourth International Aviation Security Technology Symposium, Washington, D.C., November, 2006.

Visual Search for Categories

In many real-world searches, we do not know the exact appearance of the target, but only its general category. We have been testing how search performance differs when the target is a typical or an atypical member of its category. Surprisingly, eye movements show that typicality matters more for verifying the target once it is found than for initially finding the target.

- Castelhano, M.S., Pollatsek, A., & Cave, K.R. (2008). Typicality Aids Search for an Unspecified Target, but Only in Identification, and not in Attentional Guidance. Psychonomic Bulletin and Review, 15, 795-801.

Object-Based Attention

Our results show that the allocation of attention is shaped by the presence of object boundaries even when attention is focused on a known target location, suggesting that object-based attention is an enhancement of perceptual processing rather than a prioritization of visual search. Interestingly, though, object-based attention can appear and disappear depending on a number of factors, including previous experience with a stimulus configuration and the timing of the appearance of object boundaries.

- Chen, Z., & Cave, K.R. (2008). Object-based attention with endogenous cuing and positional certainty. Perception & Psychophysics, 70, 1435-1443.

- Chen, Z., & Cave, K.R. (2006). Reinstating object-based attention under positional certainty: the importance of subjective parsing. Perception & Psychophysics, 68, 992-1003.

Attention to Partially Occluded Objects

We have found that when part of an object is occluded, the occluded region is inhibited relative to the visible regions of that object. This “Occlusion Inhibition” shows that the allocation of covert attention can be driven by object-specific goals and knowledge. However, occlusion inhibition does not occur in every visual task. Like object-based attention described above, it can appear or disappear depending on the nature of the task. Understanding when it arises and when it does not will be key to understanding the interaction between attention, locations, and object representations.

- Chambers, D., & Cave, K.R. (2008). Factors governing inhibition of occluded regions in superimposed objects. In Castelhano, M., Franconeri, S., Curby, K. and Shomstein, S. (organizers) ‘Object Perception, Attention, and Memory 2007 Conference Report 15th Annual Meeting, Long Beach, California, USA’, Visual Cognition, 16, 90-143.

- Chambers, D. (2009). Understanding Occlusion Inhibition: A Study of the Visual Processing of Superimposed Figures. Doctoral dissertation, University of Massachusetts Amherst.

Gradients in Spatial Attention

In some tasks, attention is allocated very precisely to a target location. In many cuing tasks, however, attention spills over to affect locations far away. In collaboration with the UMass NeuroCognition and Perception Lab, we are using ERP measures to map the gradients of attention. We are finding surprising differences in the effects of attention across different levels of visual processing.

- Bush, W., Sanders, L., & Cave, K.R. Spatial distribution of visual attention across cued and uncued locations: An ERP study. The Cognitive Neuroscience Society Annual Meeting, New York, NY, May, 2007.

Context Effects in Object Recognition

We have explored how the recognition of a visual object is aided by the presence of other semantically related objects. These context objects do not have to form a coherent scene to help in identification.

- Auckland, M.E., Cave, K.R., & Donnelly, N. (2007). Non-target objects can influence perceptual processes during object recognition. Psychonomic Bulletin and Review, 14, 332-337.

Visual Search Within Structured Arrays

Most visual search experiments use arrays of search elements that are randomly arranged, while the scenes within which real search is done are very structured. We have begun exploring the area between these two extremes, with search within arrays of groups of objects that are clustered together. The results reveal that search from cluster to cluster is driven differently than search within a cluster, and that this control is sensitive to subtle regularities in where search targets appear. Control of attention in complex scenes probably involves multiple levels that are not captured in simple search experiments.

- Williams, C.C., Pollatsek, A., Cave, K.R., & Stroud, M.J. (2009). More than Just Finding Color: Strategy in Global Visual Search is Shaped by Learned Target Probabilities. Journal of Experimental Psychology: Human Perception and Performance, 35, 688-699.