On February 6th, Gaja Jarosz gave an invited talk at the Old World Conference in Phonology in her hometown of Warsaw, Poland. The talk, titled “Embracing Ambiguity: Quantitative Modeling in Phonology”, was live-streamed on YouTube and also made available on StreamGram.

Author Archives: Gaja Jarosz

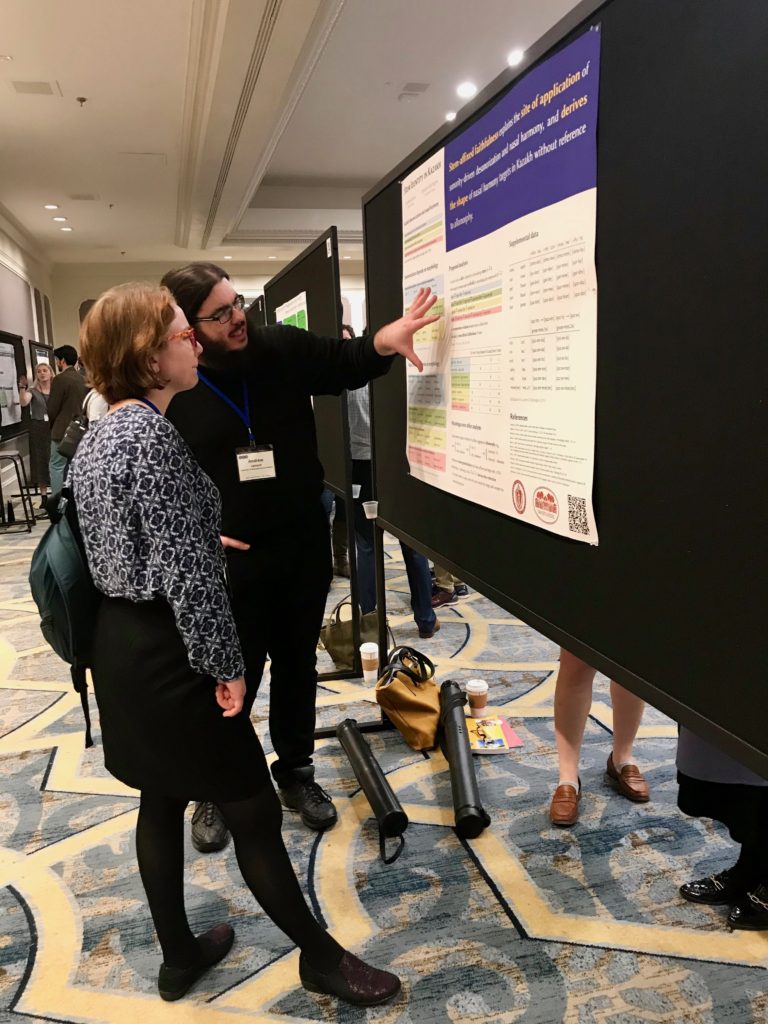

UMass Linguists at the LSA and SCiL!

UMass was very well represented at the 2020 Annual Meeting of the Linguistic Society of America (LSA) and the Third Annual Meeting of the Society for Computation in Linguistics (SCiL), held January 2-5, 2020, in New Orleans, LA.

Several current faculty and students presented at the LSA (Chris Hammerly, Andrew Lamont, Alex Göbel, Deniz Ozyildiz, Michael Becker, Brandon Prickett, Shay Hucklebridge, Kimberly Johnson, Tom Roeper, Leland Kusmer, Lisa Green) and at SCiL (Max Nelson, Brandon Prickett), which Gaja Jarosz and Joe Pater co-organized. Many alumni presented as well, including Ivy Hauser, Jennifer Smith, Elliott Moreton, Andries Coetzee, Amy-Rose Deal, Chris Potts, and Tracy Conner.

UMass students in action at the LSA:

And some New Orleans highlights:

CLC Talk on Unsupervised Learning of Phrase Structure – November 15 @ 4pm

The first CLC (Computational Linguistics Community) event of the semester will be a talk on unsupervised learning of phrase structure. The talk will be held at 4pm on November 15th as part of the new Neurolinguistics Reading Group. All are welcome! Please see below for more details.

TITLE:

Unsupervised Latent Tree Induction with Deep Inside-Outside Recursive Auto-Encoders

AUTHORS:

Andrew Drozdov*, Pat Verga*, Mohit Yadav*, Mohit Iyyer, Andrew McCallum

ABSTRACT:

Syntax is a powerful abstraction for language understanding. Many downstream tasks require segmenting input text into meaningful constituent chunks (e.g., noun phrases or entities); more generally, models for learning semantic representations of text benefit from integrating syntax in the form of parse trees (e.g., tree-LSTMs). Supervised parsers have traditionally been used to obtain these trees, but lately interest has increased in unsupervised methods that induce syntactic representations directly from unlabeled text. To this end, we propose the deep inside-outside recursive auto-encoder (DIORA), a fully-unsupervised method for discovering syntax that simultaneously learns representations for constituents within the induced tree. Unlike many prior approaches, DIORA does not rely on supervision from auxiliary downstream tasks and is thus not constrained to particular domains. Furthermore, competing approaches do not learn explicit phrase representations along with tree structures, which limits their applicability to phrase-based tasks. Extensive experiments on unsupervised parsing, segmentation, and phrase clustering demonstrate the efficacy of our method. DIORA achieves the state of the art in unsupervised parsing (48.7 F1) on the benchmark WSJ dataset.

LOCATION:

ILC N400
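The 48.7 F1 figure in the abstract is a bracketing score: the constituent spans of each induced tree are compared with the spans of the gold treebank tree. The Python sketch below shows one common way to compute unlabeled bracketing F1 for a single sentence. It is an illustration under stated assumptions, not the DIORA authors' evaluation script: trees are hypothetical nested tuples of words, single-word spans and the whole-sentence span are ignored, and the paper's exact protocol (for example, punctuation handling, or averaging per sentence versus over the whole corpus) may differ.

```python
from typing import Set, Tuple, Union

# Assumed toy representation: a leaf is a word (str); a constituent is a tuple of subtrees.
Tree = Union[str, tuple]
Span = Tuple[int, int]


def constituent_spans(tree: Tree, start: int = 0) -> Tuple[Set[Span], int]:
    """Return the (start, end) spans of all multi-word constituents and the end index."""
    if isinstance(tree, str):
        return set(), start + 1  # a single word covers one position
    spans: Set[Span] = set()
    end = start
    for child in tree:
        child_spans, end = constituent_spans(child, end)
        spans |= child_spans
    if end - start > 1:  # single-word spans carry no bracketing information
        spans.add((start, end))
    return spans, end


def unlabeled_f1(predicted: Tree, gold: Tree) -> float:
    """Unlabeled bracketing F1 for one sentence (one common convention, assumed here)."""
    pred, n_pred = constituent_spans(predicted)
    ref, n_ref = constituent_spans(gold)
    if n_pred != n_ref:
        raise ValueError("trees must cover the same sentence")
    # Every tree contains the whole-sentence span, so it is dropped from scoring.
    pred.discard((0, n_pred))
    ref.discard((0, n_ref))
    overlap = len(pred & ref)
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    gold = (("the", "cat"), ("sat", ("on", ("the", "mat"))))
    right_branching = ("the", ("cat", ("sat", ("on", ("the", "mat")))))
    print(unlabeled_f1(right_branching, gold))  # 0.75
```

In this toy sentence, the right-branching guess recovers three of the four gold spans, so precision, recall, and F1 all come out to 0.75.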

UMass Linguists and Alumni at AMP 2018

UMass was well represented at the Annual Meeting on Phonology (AMP) in San Diego, October 5-7. Several current students and faculty gave poster presentations: Ivy Hauser presented on “Effects of phonological contrast on phonetic variation in Hindi and English stops”, Andrew Lamont on “Majority Rule in Harmonic Serialism”, and Claire Moore-Cantwell, Joe Pater, Robert Staubs, Benjamin Zobel, and Lisa Sanders on “Event-related potential evidence of abstract phonological learning in the laboratory”. Alumni Michael Becker (not pictured), Gillian Gallagher (not pictured; with Maria Gouskova), Nancy Hall, Armin Mester, Junko Ito, and Joanna Zaleska also gave presentations, and Gaja Jarosz, Aleksei Nazarov, Amanda Rysling, and Brian Smith were also in attendance.

Richard Futrell at CLC/Psycholing Workshop, Friday, April 27

All are welcome at the final CLC event this spring: Richard Futrell (MIT, BCS) will speak at the Psycholinguistics Workshop on Friday, April 27th, 10am-11am, in ILC N400. Richard will also be available for individual meetings; please contact Chris Hammerly to set up an appointment. See below for the title and abstract.

Memory and Locality in Natural Language

April 27th (Fri), 10am-11am, ILC N400 (Psycholing Workshop)

I explore the hypothesis that the universal properties of human languages can be explained in terms of efficient communication given fixed human information processing constraints. First, I show corpus evidence from 54 languages that word order in grammar and usage is shaped by working memory constraints in the form of dependency locality: a pressure for syntactically linked words to be close to one another in linear order. Next, I develop a new theory of human language processing cost, based on rational inference in a noisy channel, that unifies surprisal and memory effects and goes beyond dependency locality to a new principle of information locality: that words that predict each other should be close. I show corpus evidence for information locality. Finally, I show that the new processing model resolves a long-standing paradox in the psycholinguistic literature, structural forgetting, where the effects of memory on language processing appear to be language-dependent.
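Dependency locality, as described in the abstract, can be made concrete by summing the linear distance between each word and its syntactic head: orders with a smaller total keep syntactically linked words closer together. The Python sketch below is a minimal illustration under assumed conventions, not the analysis presented in the talk: each sentence is a hypothetical list of 1-based head positions with 0 marking the root, and the annotation in the example (particle attached to the verb) is one simple choice among several.

```python
from typing import Sequence


def total_dependency_length(heads: Sequence[int]) -> int:
    """Sum |position(word) - position(head)| over all non-root words.

    heads[i] is the 1-based position of the head of word i + 1; 0 marks the root.
    """
    total = 0
    for position, head in enumerate(heads, start=1):
        if head != 0:  # the root word has no head, so it contributes nothing
            total += abs(position - head)
    return total


if __name__ == "__main__":
    # "John threw out the old trash" (hypothetical, simplified annotation):
    # John->threw, out->threw, the->trash, old->trash, trash->threw
    particle_first = [2, 0, 2, 6, 6, 2]
    # "John threw the old trash out": same dependencies, particle moved to the end
    particle_last = [2, 0, 5, 5, 2, 2]
    print(total_dependency_length(particle_first))  # 9
    print(total_dependency_length(particle_last))   # 11
```

Both orders encode the same dependencies, but placing the short particle before the longer object lowers the total from 11 to 9, which is the direction a pressure for dependency locality would favor.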

Practice Talks & Posters for PhoNE

On Monday, March 26th, we will have a practice talk and three practice posters for this year’s PhoNE. All are welcome.

The practice talk and posters will be presented at Sound Workshop, 10am-11am, in N451:

- Andrew Lamont – “Precedence is Pathological” (talk)

- Katie Tetzloff – “Exceptionality in Spanish onset clusters” (poster)

- Brandon Prickett – “Experimental Evidence for Biases in Phonological Rule Interaction” (poster)

- Jelena Stojković – “OCP and Intra-stratal Opacity of Case Marking” (poster)

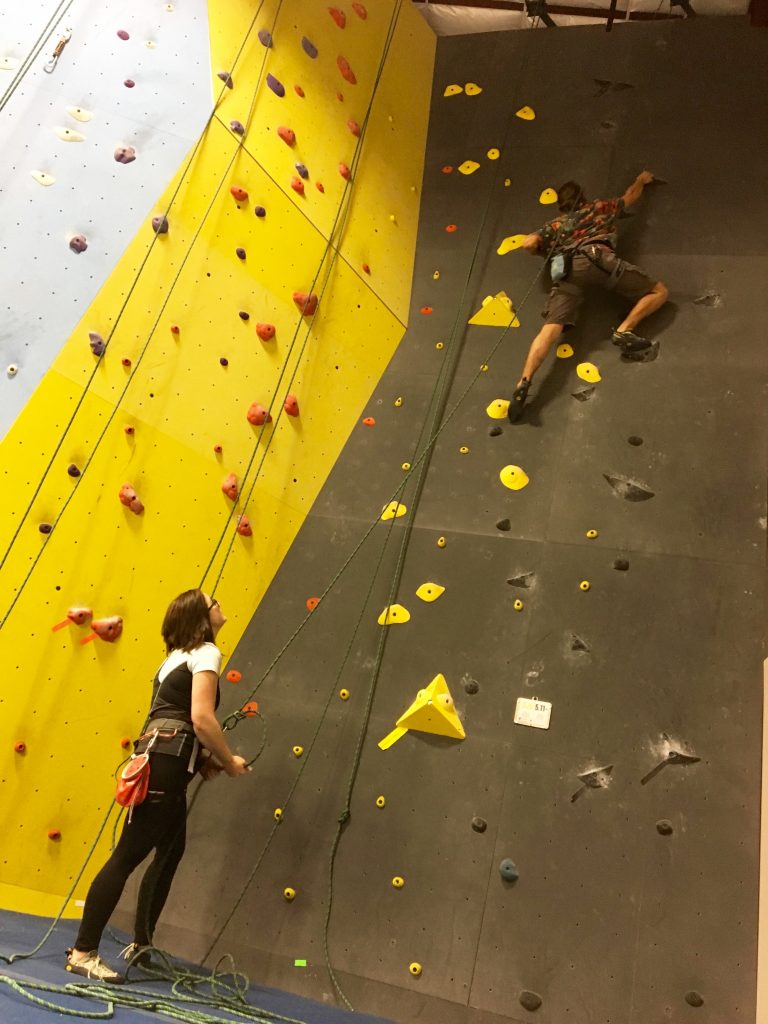

Climbing Linguists

Our group of climbing linguists has grown so much that we have created an official mailing list: CLING. We regularly climb at the Central Rock Gym in Hadley and sometimes venture outdoors and attend other climbing events. If you’d like to join the CLING mailing list and keep updated about climbing events, email Gaja.

Spring 2018 Computational Linguistics Community (CLC) Events

See below for our exciting lineup of CLC events this semester. All are welcome! Mark your calendars!

- Soroush Vosoughi (MIT), Data Science Seminar talk
  - Feb 22nd at 4pm, CS 150/1

- COLING Paper Clinic
  - February 28th (Wed), 4-5pm, CS 303 (NLP Reading Group)

- Yulia Tsvetkov (CMU Computer Science)
  - March 1st (Thu), 12pm, CS 150/1 (MLFL)

- Yelena Mejova (QCRI), iSchool Seminar talk
  - March 6th at 4pm, CS 150/1

- Brian Dillon (UMass Linguistics) on “Syntactic Frequency Effects in Recognition Memory”
  - March 30th (Fri; rescheduled from March 9th), 12:20-1:20pm, ILC N451 (Experimental Lab)

- Michael Becker (Stony Brook Linguistics) on Modeling Arabic Plurals
  - April 9th (Mon), 10am-11am, ILC N451 (Sound Workshop)

- Richard Futrell (MIT BCS), title TBA
  - April 27th (Fri), 10am-11am, ILC N400 (Psycholing Workshop)

Upcoming Computational Linguistics Community Events

Please join us for two upcoming CLC events!

- Students in Cognitive Modeling (Ling 692c) present their final projects
  - Dec 8, 9-10am, in ILC N400 (Psycholinguistics Workshop)
  - Students will give 5-minute presentations about their final class projects, which involve computational modeling of a psycholinguistic task or phenomenon

- Students in Intro NLP (CS 585) give poster presentations of their final projects
  - Dec 12, 3:30-5pm (session 1) and 5-6:30pm (session 2), in CS room 150/151
  - See description below from Brendan O’Connor

CS 585 Poster Sessions

Prickett and Lamont at NECPhon 2017

Brandon Prickett and Andrew Lamont will present papers at NECPhon 2017 at Stony Brook on October 21st. Brandon’s talk is titled “Learning biases in opaque interactions”, and Andrew’s is “Subsequential steps to unbounded tonal plateauing”. See the conference page for the full schedule and to register (by October 16th).