Ever since Quine’s “On What There Is”, discussions of the types of variables in natural languages have occupied a special place in semantics. According to Quine, “to be assumed as an entity is, purely and simply, to be reckoned as the value of a variable.” After eleven years in the archives, Meredith Landman’s landmark 2006 dissertation on Variables in Natural Language has now been made publicly available on ScholarWorks. Landman’s dissertation argues for severe type restrictions for object language variables in natural languages, targeting pro-forms of various kinds, elided constituents, and traces of movement.

In his 1984 UMass dissertation, Gennaro Chierchia had already proposed the ‘No Functor Anaphora Constraint’, which says that ‘functors’ (e.g. determiners, connectives, prepositions) do not enter anaphoric relationships. Landman’s dissertation goes further in arguing for a constraint that affects all object language variables and also rules out properties as possible values for them. Her ‘No Higher Types Variable Constraint’ (NHTV) restricts object language variables to the semantic type e of individuals.

Landman explores the consequences of the NHTV for the values of overt pro-forms like such or do so, as well as for gaps of A’-movement and for NP and VP ellipsis. Since the NHTV bars higher type variables in all of those cases, languages might have to use strategies like overt pro-forms or partial or total syntactic reconstruction of the antecedent to interpret certain types of movement gaps and elided constituents. The NHTV thus validates previous work arguing for syntactic reconstruction and against the use of higher-type variables (e.g. Romero 1998 and Fox 1999, 2000), as well as work arguing for treating ellipsis as involving deletion of syntactic structure.

The topic of the type of traces has most recently been taken up again in Ethan Poole’s 2017 UMass dissertation, which contributes important new evidence confirming that the type of traces should indeed be restricted to type e.

(This post was crafted in collaboration with Meredith Landman, who also provided the pictures.)

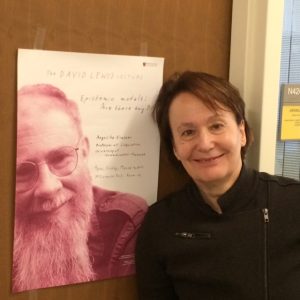

I feel so honored and happy to be giving the 2017 David Lewis Lecture in Princeton. David Lewis was the most important influence on me as I was mapping out the path I wanted to take as a linguist and semanticist. Mysteriously, the handwriting on the poster is Lewis’s very own handwriting.
