Whether you came to college with an old laptop, or want to buy a new one without breaking the bank, making our basic computers faster is something we’ve all thought about at some point. This article will show you some software tips and tricks to improve your gaming experience without losing your shirt, and at the end I’ll mention some budget hardware changes you can make to your laptop. First off, we’re going to talk about in-game settings.

In-Game Settings:

Nearly all games have built-in settings that tailor the experience to the individual user, from controls to graphics to audio. We’ll be talking about graphics settings in this section, primarily the hardware-intensive ones that can be lowered without compromising the look of the game as much as others. The results also depend on the game and your individual GPU, so it can be helpful to research the specific settings recommended by other users with similar hardware.

V-Sync:

V-Sync, or Vertical Synchronization, synchronizes the game’s framerate with your monitor’s refresh rate. Enabling it removes screen tearing, which makes the game look smoother. However, on lower-end computers that cannot keep up with the refresh rate, V-Sync can push the framerate down even further, and you may be happier just running the game at a stable FPS below your monitor’s refresh rate. (Note: most monitors have a 60Hz refresh rate, so they display at most 60 frames per second.) For that reason, you may want to disable V-Sync to allow for more stable performance at low FPS.
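To see why V-Sync can hurt a slower machine, here is a rough arithmetic sketch. It assumes plain double-buffered V-Sync, where every finished frame has to wait for the next monitor refresh; adaptive sync and triple buffering behave differently. The function name `vsync_fps` is made up for this illustration.

```python
import math


def vsync_fps(frame_time_ms: float, refresh_hz: float = 60.0) -> float:
    """Displayed frame rate under simple double-buffered V-Sync (assumed model).

    Each finished frame waits for the next monitor refresh, so one frame
    always occupies a whole number of refresh intervals.
    """
    # Number of refresh intervals a single frame spans (at least one).
    intervals = max(1, math.ceil(frame_time_ms * refresh_hz / 1000.0))
    return refresh_hz / intervals


if __name__ == "__main__":
    for ms in (10.0, 20.0, 40.0):
        uncapped = 1000.0 / ms
        print(f"{ms:.0f} ms per frame: {uncapped:.0f} FPS uncapped, "
              f"{vsync_fps(ms):.0f} FPS with V-Sync")
```

Under this model, a laptop that needs 20 ms per frame (50 FPS uncapped) is held to 30 FPS on a 60Hz monitor, because each frame misses one refresh and has to wait for the next.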

Anti-Aliasing:

Anti-Aliasing, or AA for short, is a rendering option which reduces the jaggedness of lines in-game. Unfortunately, the additional smoothness heavily impacts hardware usage, and disabling it while keeping other things like texture quality or draw distance higher can bring big performance improvements without hurting a game’s appearance too much. Games may also offer several different kinds of AA. MSAA (Multisampling AA) and the even more intensive TXAA (Temporal AA) both smooth edges better, but have an even bigger impact on performance, so turning these off on lower-end machines is almost always a must. FXAA (Fast Approximate AA) uses the least processing power, and can therefore be a nice setting to leave on if your computer can handle it.

Anisotropic Filtering (AF):

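This setting keeps textures sharp on surfaces viewed at a steep angle, such as a road or floor stretching away from your character. It is often confused with depth of field, a separate setting that makes things further away from your character blurrier. Making things blurrier might seem like it would make the game faster, but depth of field actually puts a greater strain on your system, as it needs additional calculations to produce the effect. Shutting depth of field off can yield improvements in performance, and some players even prefer it, as it allows them to see distant objects more clearly. Anisotropic filtering itself is usually cheaper, but lowering it (for example from 16x to 4x) can still help on weaker GPUs.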

Other Settings:

While the aforementioned are the heaviest hitters in terms of performance, changing some other settings can help increase stability and performance too (beyond just simple texture quality and draw distance tweaks). Shadows and reflections are often unnoticed compared to other effects, so while you may not need to turn them off, turning them down can definitely make an impact. Motion blur should be turned off completely, as it can make quick movements result in heavy lag spikes.

Individual Tweaks:

The guide above is a good starting point for graphics settings. Because there are so many different laptop models, there is an equally large number of possible combinations of settings. From this point, you can start to increase settings slowly to find the sweet spot between performance and quality.

Software:

Before we talk about some more advanced tips, it’s good practice to close applications that you are not using to free up CPU time, memory, and disk activity. This alone will help immensely in allowing games to run better on your system.

Task Manager Basics:

If you’ve tried to game on a slower computer, you’ll know how annoying it is when the game is running fine and suddenly everything slows down to slideshow speed and you fall off a cliff. Chances are that this kind of lag spike is caused by other “tasks” running in the background and preventing the game from using the power it needs to keep going. Or perhaps your computer has been on for a while, so when you start the game, it runs slower than its maximum speed. Even though you hit the “X” button on a window, what’s called the “process tree” may not have been completely terminated. (Think of this like cutting down a weed but leaving the roots.) This can result in resources being taken up by idle programs that you aren’t using right now.

It’s at this point that Task Manager becomes your best friend. To open Task Manager, press CTRL + SHIFT + ESC at the same time, or press CTRL + ALT + DEL and select Task Manager from the menu. When it first appears, you’ll notice that only the programs you have open are listed; click the “More details” button at the bottom of the window to expand Task Manager. Now you’ll see a series of tabs, the first one being “Processes”, which gives you an excellent overview of everything your CPU, Memory, Disk, and Network are crunching on. Clicking any of these column headers sorts the list so that the process using the most of that resource is at the top. Now you can see what’s really using your computer’s processing power.

It is important to realize that many of these processes are part of your operating system, and therefore cannot be terminated without causing system instability. However, things like Google Chrome and other applications can be closed by right-clicking them and hitting “End task”. If you’re ever unsure whether you can safely end a process, a quick Google search for the process in question will most likely point you in the right direction.
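If you prefer a quick text overview, Windows also ships with a command-line tool called `tasklist`. The sketch below is one way to sort its output by memory use. The helper names (`parse_tasklist`, `top_memory`, `read_tasklist`) are made up for this example, and the parsing assumes the five-column CSV layout that `tasklist /FO CSV /NH` prints, with memory shown in a form like `150,232 K`; the exact formatting can vary with your language settings.

```python
import csv
import io
import subprocess


def parse_tasklist(csv_text: str) -> list:
    """Parse `tasklist /FO CSV /NH` output into (name, pid, memory_kb) tuples."""
    rows = []
    for fields in csv.reader(io.StringIO(csv_text)):
        if len(fields) < 5:
            continue  # skip blank or unexpected lines
        name, pid, mem = fields[0], fields[1], fields[4]
        # Memory looks like "150,232 K"; keep only the digits.
        digits = "".join(ch for ch in mem if ch.isdigit())
        if pid.isdigit() and digits:
            rows.append((name, int(pid), int(digits)))
    return rows


def top_memory(rows: list, count: int = 5) -> list:
    """Return the `count` rows using the most memory, largest first."""
    return sorted(rows, key=lambda row: row[2], reverse=True)[:count]


def read_tasklist() -> str:
    """Run tasklist and return its CSV output (Windows only)."""
    result = subprocess.run(
        ["tasklist", "/FO", "CSV", "/NH"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

On a Windows machine, `top_memory(parse_tasklist(read_tasklist()))` would give the five processes holding the most memory, which is roughly what you get by clicking the Memory column in Task Manager.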

Startup Processes:

Here is where you can really make a difference to your computer’s overall performance, not just for gaming. From Task Manager, if you select the “Startup” tab, you will see a list of all the programs and services that can start when your computer is turned on. Task Manager gives each one an impact rating showing how much it slows down your computer’s boot time. The gaming app Steam, for example, can noticeably slow down a computer on startup. A good rule of thumb is to allow virus protection to start with Windows; everything else is up to individual preference. Disabling these startup entries prevents unnecessary tasks from ever being opened, and leaves more hardware resources available for gaming.

Power Usage:

You probably know that unlike desktops, laptops contain a battery. What you may not know is that you can alter your battery’s behavior to increase performance, as long as you don’t mind it draining a little faster. On the taskbar, which is by default located at the bottom of your screen, you will notice a collection of small icons next to the date and time on the right, one of which looks like a battery. Left-clicking it brings up a small battery status menu, while right-clicking it brings up a menu with a “Power Options” entry.

Clicking this will bring up a settings window which allows you to change and customize your power plan for your needs. By default it is set to “Balanced”, but changing to “High Performance” can noticeably increase your computer’s gaming potential. Be warned that battery life will decrease on the High Performance setting, although it is possible to set the behavior separately for when your computer is running on battery and when it is plugged in.
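The same switch can be made from a script using the `powercfg` tool that comes with Windows. This is only a sketch: the helper names are made up for the example, it relies on powercfg’s built-in plan aliases (`SCHEME_MIN` is the alias for High Performance, meaning minimum power savings), and on some newer laptops the High Performance plan is hidden, in which case the command will fail.

```python
import subprocess
import sys

# Built-in powercfg aliases for the three classic Windows power plans.
PLAN_ALIASES = {
    "power saver": "SCHEME_MAX",       # maximum power savings
    "balanced": "SCHEME_BALANCED",
    "high performance": "SCHEME_MIN",  # minimum power savings
}


def set_plan_command(plan: str) -> list:
    """Build the powercfg command that activates the named plan."""
    alias = PLAN_ALIASES[plan.strip().lower()]
    return ["powercfg", "/setactive", alias]


def activate_plan(plan: str) -> None:
    """Switch power plans; only meaningful on Windows."""
    if sys.platform != "win32":
        raise RuntimeError("powercfg is only available on Windows")
    subprocess.run(set_plan_command(plan), check=True)
```

Calling `activate_plan("High Performance")` before a gaming session and `activate_plan("Balanced")` afterwards would mimic flipping the setting by hand in Power Options.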

Hardware:

Unlike desktops, laptops do not have many upgrade paths. However, a couple of options exist for most laptops that can have a massive effect on performance if you’re willing to spend a little extra.

Hard Disk (HDD) to Solid State (SSD) Drive Upgrade:

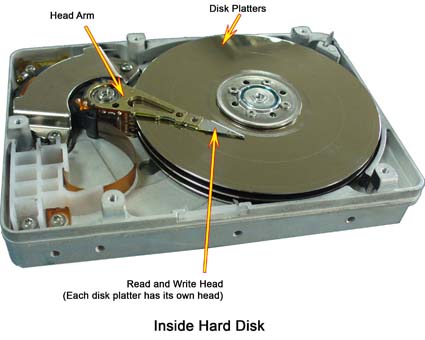

Chances are that if you have a budget computer, it came with a traditional spinning hard drive. For manufacturers, this makes sense, as hard drives are cheaper than solid state drives and work perfectly well for light use. Games, however, need to read and store data very quickly, which can be very demanding on laptop HDDs and sometimes causes them to fall behind. Additionally, many laptops have motion sensors that pause reads and writes when the computer is in motion, to prevent damage to the spinning disk inside the HDD. An upgrade to an SSD not only eliminates this restriction, but also gives much faster read/write times due to the lack of any moving parts. Although SSDs can get quite expensive depending on the size you want, companies such as Crucial or Kingston offer comparatively cheap alternatives to Samsung or Intel while still giving you the core benefits of an SSD. Although there is a plethora of tutorials online demonstrating how to install a new drive in your laptop, make sure you’re comfortable with the risks before attempting it, or simply take your laptop to a repair store and have them do it for you. It’s worth mentioning that when you install a new drive, you will need to reinstall Windows and all your applications, unless you first clone the contents of your old drive onto the new one.

Memory Upgrade (RAM):

Some laptops have an extra memory slot, or simply ship with less memory than they are capable of holding. Most budget laptops ship with 4GB of memory, which is often not enough to support both the system and a game.

Upgrading or adding memory can give your computer more headroom to process and store data without lagging up your entire system. Unlike SSDs, memory is very specific, and it is very easy to buy a new stick that fits in your computer but does not function with its other components. It is therefore critical to do your research before buying any more memory for your computer; that includes finding out your model’s maximum capacity, speed, and generation. The online technology store Newegg has a service that can help you find compatible memory types for your machine.
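The research step can be thought of as a short checklist. The sketch below is purely illustrative: the class names, fields, and example values are made up, and the real numbers have to come from your laptop’s spec sheet. It checks generation, physical form factor, and total capacity only, and leaves speed out because it depends on the individual machine.

```python
from dataclasses import dataclass


@dataclass
class LaptopMemory:
    """What the laptop supports, taken from the manufacturer's spec sheet."""
    generation: str    # e.g. "DDR4"
    form_factor: str   # e.g. "SO-DIMM"
    max_total_gb: int  # most memory the model supports
    kept_gb: int       # memory that stays installed after the upgrade


@dataclass
class MemoryStick:
    """The stick you are thinking about buying."""
    generation: str
    form_factor: str
    capacity_gb: int


def compatibility_problems(laptop: LaptopMemory, stick: MemoryStick) -> list:
    """Return reasons the stick may not work; an empty list means none were found."""
    problems = []
    if stick.generation != laptop.generation:
        problems.append(
            f"generation: laptop needs {laptop.generation}, stick is {stick.generation}"
        )
    if stick.form_factor != laptop.form_factor:
        problems.append(
            f"form factor: laptop needs {laptop.form_factor}, stick is {stick.form_factor}"
        )
    total_gb = laptop.kept_gb + stick.capacity_gb
    if total_gb > laptop.max_total_gb:
        problems.append(
            f"capacity: {total_gb}GB total exceeds the {laptop.max_total_gb}GB maximum"
        )
    return problems
```

For a hypothetical laptop described as `LaptopMemory("DDR4", "SO-DIMM", 16, 4)`, an 8GB DDR4 SO-DIMM stick passes every check, while a 16GB DDR3 stick is flagged for both its generation and the total capacity.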

Disclaimer:

While these tips and tricks can help your computer to run games faster, there is a limit to what hardware is capable of. Budget laptops are great for the price point, and these user tricks will help squeeze out all their potential, but some games will simply not run on your machine. Make sure to check a game’s minimum and recommended specs before purchasing/downloading. If your computer falls short of minimum requirements, it might be time to find a different game or upgrade your setup.

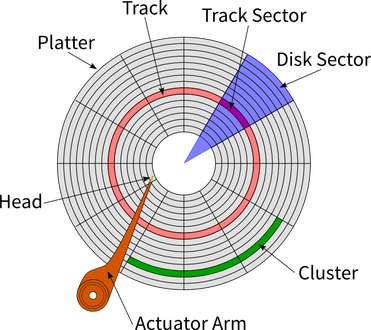

As HDDs have been developed, one key factor that has changed is the orientation of the magnetic bits on the platter. Hard drives were first designed for “Longitudinal Recording”, meaning each bit’s magnetization lies flat along the surface of the platter, and have since moved to a different method called “Perpendicular Recording”, where the bits stand on end. This change was made because hard drive manufacturers were hitting a limit on how small they could make each bit due to the “Superparamagnetic Effect.” Essentially, the superparamagnetic effect means that magnetic regions smaller than a certain size will flip their magnetic polarity randomly based on temperature. This phenomenon would result in inaccurate data storage, especially given the heat that an operating hard drive emits.

In recent years, a new technology has become available on the consumer market which replaces HDDs and the problems they come with. Solid State Drives (SSDs) are another kind of non-volatile storage, one that holds data as trapped electrical charge in tiny flash memory cells. As a result, SSDs are much faster than HDDs, as there are no moving parts and therefore no time spent moving a read/write arm around. Having no moving parts also makes them far less prone to mechanical failure. Solid state drives do have a few downsides, however. Unlike with hard drives, it is difficult to tell when a solid state drive is failing. Hard drives will slow down over time, or in extreme cases make an audible clicking that signifies the arm is hitting the platter (in which case your data is most likely gone), while solid state drives will simply fail without any noticeable warning. Therefore, we must rely on software such as “Samsung Magician”, which Samsung provides for its solid state drives. The tool works by writing a piece of data to the drive, reading it back, and checking how fast it is able to do this. If the write speed falls below a certain threshold, the software will warn the user that their solid state drive is beginning to fail.
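The write-and-read-back idea can be tried out with a few lines of Python. This is a rough illustration of the general technique and not how Samsung Magician itself is implemented; the function name and the 16MB test size are arbitrary choices, and the read figure will often be inflated because the operating system serves recently written data from its cache.

```python
import os
import tempfile
import time


def time_write_and_read(directory: str, size_mb: int = 16) -> dict:
    """Write random data to a temp file, read it back, and time both steps."""
    payload = os.urandom(size_mb * 1024 * 1024)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as handle:
            handle.write(payload)
            handle.flush()
            os.fsync(handle.fileno())  # ask the OS to push the data to the drive
        write_seconds = max(time.perf_counter() - start, 1e-9)

        start = time.perf_counter()
        with open(path, "rb") as handle:
            read_back = handle.read()
        read_seconds = max(time.perf_counter() - start, 1e-9)
    finally:
        os.remove(path)  # always clean up the test file

    return {
        "intact": read_back == payload,  # did we get the same bytes back?
        "write_mb_per_s": size_mb / write_seconds,
        "read_mb_per_s": size_mb / read_seconds,
    }
```

Running `time_write_and_read("C:\\")` every so often and comparing the numbers would give a crude picture of whether a drive is slowing down, though a dedicated tool from the drive’s manufacturer is the more reliable choice.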